Job Scheduling Policy¶

Login Nodes are for Interactive, Non-intensive Work Only¶

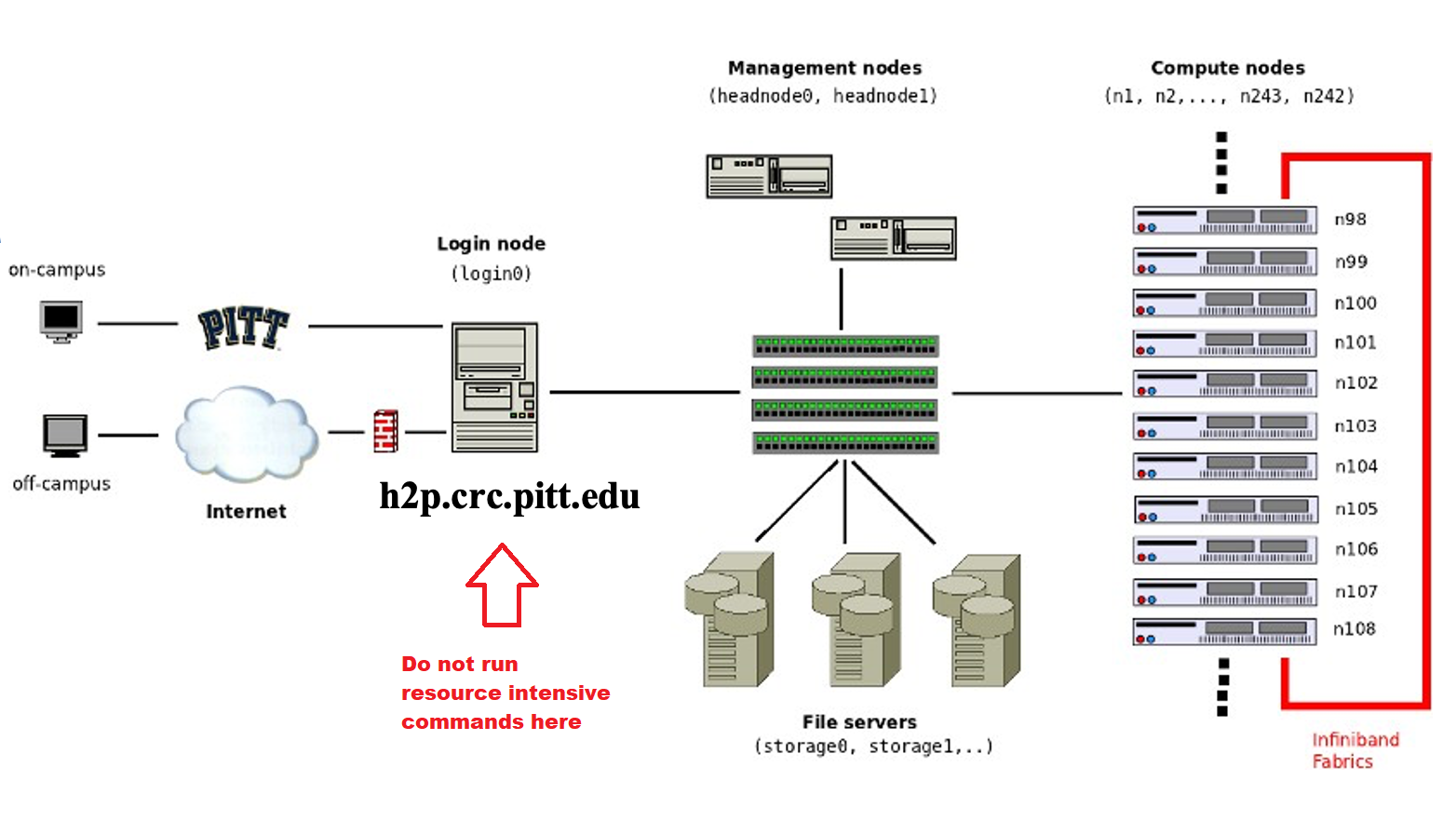

Many users are logged into the login nodes of the H2P and HTC clusters at the same time. These are the gateways everyone uses to perform interactive work like editing code, submitting and checking the status of jobs, etc.

Executing processing scripts or commands on these nodes can cause substantial slowdowns for the rest of the users. For this reason, it is important to make sure that this kind of work is done in either an interactive session on a node from one of the clusters, or as a batch job submission.

Resource-intensive processes found to be running on the login nodes may be killed at anytime.

The CRCD team reserves the right to revoke cluster access of any user who repeatedly causes slowdowns on the login nodes with processes that can otherwise be run on the compute nodes.

Jobs are Subject to Priority Queueing¶

There are settings in place within the Slurm Workload Manager that allow all groups (and users within those groups) to have equal opportunity to run calculations. This concept is called “Fair Share”. The fair share is a multiplicative factor in computing a job’s “Priority”. At Pitt, we use a multi-factor priority system which includes:

Age [0,1] – Length of time the job been in the queue and eligible to be scheduled. Longer time spent in the queue recieves higher priority. The maximum is attained at a queue time of 7 days. The priority weight for age is 2000.

Job Size [0,1] – Required Nodes, CPUs, and Memory usage. Larger requests receive increased priority. The maximum corresponds to using all the resources on the cluster. The priority weight for job size is 2000.

Quality of Service (QOS) [0,1] - Factor based on the Walltime of the job. Normalized to the highest (short QOS). The priority weight for QOS is 2000.

| Name | Priority | QOS Factor | Max Walltime (D-HH:MM:SS) |

Short |

13 | 1.00 | 1-00:00:00 |

Normal |

12 | 0.92 | 3-00:00:00 |

Long |

11 | 0.84 | 6-00:00:00 |

Long-long (on SMP and HTC) |

10 | 0.76 | 21-00:00:00 |

Fair Share [0,1] - A metric for overall usage by allocation that prioritizes jobs from under serviced slurm accounts. Less overall cluster use receives higher priority. The priority weight for fairshare is 3000.

Priority is computed as a sum of the individual factors multiplied by their priority weights. Higher priorities will be assigned resources first. Your jobs may receive pending status with Priority as the reason.

Checking Job Priority¶

You can use the sprio slurm utility to see the priority of your jobs

[nlc60@login0b ~] : sprio -M smp -u nlc60 -j 6136016

JOBID PARTITION USER PRIORITY SITE AGE FAIRSHARE JOBSIZE QOS

6136016 smp nlc60 2272 0 0 269 4 2000

Walltime Extensions will generally not be Granted¶

It is up to the job submitter to determine the demands of their job through some benchmarking before submitting, perform any necessary code optimization, and to then specify the memory, cpu, and time requirements accordingly. This ensures that the job is queued with respect to FairShare, and that CRCD resources utilized by a job are available to other users within a reasonable time frame.

Exceeding Usage Limits will cause Job Pending Status¶

After submission, a job can appear with a "status" of "PD" (not running).

There are various reasons that Slurm will put your job in a pending state. Some common explanations are listed below.

Reasons related to resource availability and job dependencies:¶

| Reason | Explanation | Resolution |

| Resources | The cluster is busy and no resources are currently available for your job. | Your job will run as soon as the resources requested become available |

| Priority | See section on job priority | Your job will run as soon as it has reached a high enough priority |

| Dependency | A job cannot start until another job is finished. This only happens if you included a "--dependency" directive in your Slurm script. | Wait until the job that you have marked as a dependency is finished, then your job will run |

| DependencyNeverSatisfied | A job cannot start because another job on which it depends failed | Please cancel this job, as it will never be able to run. You will need to resolve the issues in the job that has been marked as a dependency. |

Reasons related to exceeding a usage limit:¶

JobArrayTaskLimit, QOSMaxJobsPerUserLimit and QOSMaxJobsPerAccountLimit¶

One or more of your jobs have exceeded limits in place on the number of jobs you can have in the queue that are actively accruing priority. Jobs with this status will remain in the queue, but will not being accruing priority until other jobs from the submitting user have completed.

In most cases the per-account limit is 500 jobs, and the per-user limit is 100 jobs. You can use

sacctmgr show qos format=Name%20,MaxJobsPA,MaxJobsPU,MaxSubmitJobsPA,MaxSubmitJobsPU,MaxTresPA%20 to view the limits

for any given QOS.

The maximum job array size is 500 on SMP, MPI, and HTC, 1001 on GPU. The array size limits are defined at the cluster configuration level:

[nlc60@login1 ~] : for cluster in smp mpi gpu htc; do echo $cluster; scontrol -M $cluster show config | grep MaxArraySize; done

smp

MaxArraySize = 500

mpi

MaxArraySize = 500

gpu

MaxArraySize = 1001

htc

MaxArraySize = 500

These limits exist to prevent users who batch submit large quantities of jobs in a loop or job array from having all of their jobs at a higher priority than one-off submissions simply due to having submitted them all at once.

A hard limit on the maximum number of submitted jobs (including in a job array) is 1000. This separate limit exists to prevent any one user from overwhelming the workload manager with a singular, very large request for resources.

MaxMemoryPerAccount¶

The job exceeds the current within-group memory quota. The maximum quota available depends on the cluster and partition. The table below gives the maximum memory (in GB) for each QOS in the clusters/partitions it is defined.

| Cluster | Partition | Short | Normal | Long |

| smp | smp | 13247 | 11044 | 9999 |

| legacy | 620 | 620 | 620 | |

| high-mem | 6512 | 6512 | 6512 |

If you find yourself consistently running into this issue, you can use the crc-seff tool to determine the efficiency of

your completed jobs:

[chx33@login1 ~]$ crc-seff -M mpi 2707801

Job ID: 2707801

Cluster: mpi

User/Group: hillier/dhillier

State: TIMEOUT (exit code 0)

Nodes: 4

Cores per node: 48

CPU Utilized: 4-00:48:03

CPU Efficiency: 99.84% of 4-00:57:36 core-walltime

Job Wall-clock time: 00:30:18

Memory Utilized: 8.11 GB

Memory Efficiency: 2.16% of 375.00 GB

AssocGrpBillingMinutes¶

- Your group's Allocation ("service units") usage has surpassed the limit specified in your active resource Allocation,

or your active Allocations have expired. You can double-check this with

crc-usage. Please submit a new Resource Allocation Request following our guidelines.

MaxTRESPerAccount, MaxCpuPerAccount, or MaxGRESPerAccount¶

In the table below, the group based CPU (GPUs for the gpu cluster) limits are presented for each QOS walltime length. If your group requests more CPU/GPUs than in this table you will be forced to wait until your group's jobs finish.

| Cluster | Partition | Short QOS (1 Day) | Normal QOS (3 Days) | Long QOS (6 Days) | Long-long QOS (21 Days) |

|---|---|---|---|---|---|

| smp | smp | 2304 | 1613 | 1152 | 461 |

| high-mem | 320 | 224 | 160 | ||

| gpu | a100 | 16 | 12 | 8 | |

| a100_multi | 32 | 24 | 8 | ||

| a100_nvlink | 24 | 16 | 8 | ||

| l40s | 24 | 16 | 8 | ||

| mpi | mpi | 3264 | 2285 | 1632 | |

| htc | htc | 1536 | 1075 | 768 | 307 |

| teach | cpu | 1152 | 1152 | 1152 | 1152 |

| teach | gpu | 24 | 24 | 24 | 24 |